Automated decision systems can have a significant impact on our lives. They can determine loan approvals, hiring decisions, or even court sentences.

More importantly, these automated solutions can affect our autonomy, that is, our capacity to make authentic and self-governing decisions. They can make decisions that deprive us of control over, and responsibility for, our own choices as self-governing agents.

Because they can operate in ways we cannot legitimately endorse, we may need to defend ourselves against them.

Here’s how we, as users, can claim our rights, and how designers can make these systems work for us.

The rights of automated decision system users

Imagine a woman who willingly plays a subservient role to her husband in their marriage. Let’s call her Victoria.

Victoria stays at home, raises her children by herself, and has no career aspirations. She’s happy being a stay-at-home mom and meeting the needs of her family. Moreover, she often reflects on her values, tests her opinions against those of women with different views, and is firmly convinced that she lives a life that fits her core values.

Can Victoria be considered autonomous? Her case shows how tricky the question of autonomy is. Ethicists assess a person’s autonomy at two levels:

The first level is about psychological autonomy. According to John Christman, autonomous people have the psychological capacity to make decisions for themselves. They can entertain and form fundamental values and preferences of their own. They can think critically about these values, justify them to others, and act on them.

But that’s not all. Autonomous people can also change their minds when they realize that their current situation is alienating them. They can assess whether their values and preferences are consistent with their biographical narrative. In other words, their actions appear authentic to their beliefs and sense of self.

Victoria seems to meet all these requirements. Over her life, she has cultivated fundamental values and preferences (valuing her family and caring for it), and she is able to reflect on them. She has also built a narrative that is consistent with her sense of self: she believes that a woman should be subservient in marriage so that children are raised in a stable and nurturing home.

Nevertheless, from a modern perspective, some might argue that Victoria did not freely choose her life and could lead one with greater possibilities. So what’s missing?

When assessing a person’s autonomy, another philosopher, Marina Oshana, looks at the social structure in which individuals live. She argues that people’s autonomy depends on the way relationships and social norms define their roles.

In Victoria’s case, she grew up in a society where only one ideal persisted: a caring woman fully dedicated to her family. She couldn’t take on another social role, as her community would immediately judge and shun her. As a result, she couldn’t take control of her professional, educational, and social life. She lacked the social support she would have needed to explore other fulfilling possibilities.

When it comes to restricting or sustaining these social opportunities, algorithms can play a significant role.

What makes automated decision technologies harmful

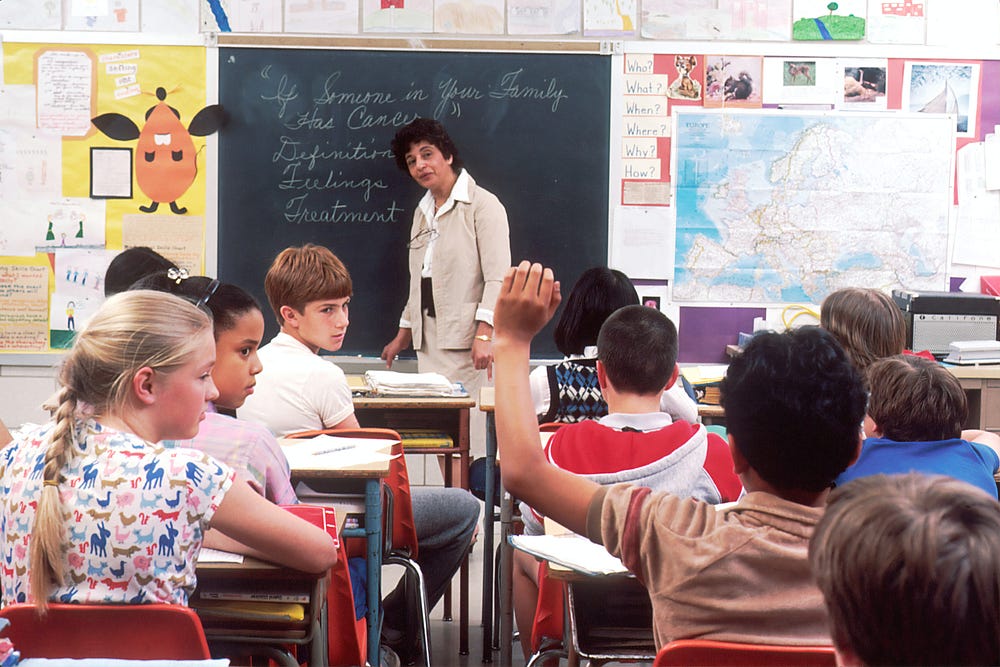

Let’s now imagine that Victoria wants to leave her house and become a successful teacher.

She joins a school and starts giving her first lectures. She also finds that her school uses a performance assessment tool called IMPACT (a system implemented in Washington, D.C. public schools in the US). Every semester, this tool computes a score by comparing her students’ ratings with the ratings of all the students in other classes and schools in the district.

The school then uses this score to set her compensation and her position. It helps decide whether she advances in her career or moves backward.
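The article does not give IMPACT’s actual formula, so the following is a purely hypothetical simplification: assume the score is the percentage of other classes in the district whose mean student rating falls below hers. The function name `percentile_score` and all ratings are invented for illustration. Even this toy version shows a key property of relative scoring: Victoria’s score can change when nothing about her own class changes.

```python
from statistics import mean


def percentile_score(class_id: str, ratings_by_class: dict[str, list[float]]) -> float:
    """Hypothetical relative score: the percentage of other classes in the
    district whose mean student rating is strictly below this class's mean."""
    own_mean = mean(ratings_by_class[class_id])
    other_means = [mean(r) for cid, r in ratings_by_class.items() if cid != class_id]
    below = sum(1 for m in other_means if m < own_mean)
    return 100.0 * below / len(other_means)


# Victoria's class ratings are identical in both districts (mean 3.5);
# only the other classes differ. All numbers are invented.
victoria = [3.0, 4.0, 3.5, 3.5]
district_a = {"victoria": victoria, "b": [2.0, 3.0], "c": [3.0, 3.0], "d": [4.0, 5.0]}
district_b = {"victoria": victoria, "b": [4.0, 4.0], "c": [3.0, 5.0], "d": [3.0, 4.0]}
print(round(percentile_score("victoria", district_a), 1))  # 66.7
print(round(percentile_score("victoria", district_b), 1))  # 0.0
```

Under this assumption, the same class earns about 66.7 in one district and 0.0 in the other, which is exactly the kind of dependence on factors outside her control that the Relevance question below asks about.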

It’s no wonder, then, that Victoria wants to be sure she can endorse such an automated system.

To figure out how it can affect her autonomy, she can consider four factors:

- Reliability: are the algorithm’s calculations accurate, and do they reflect her actual situation and performance?

- Relevance: does its assessment rely on factors that are under her control and within her responsibility?

- Impact: what consequences do its decisions entail for her?

- Relative Burden: does it carry biases that favor some social groups over others with different characteristics?

In this case, Victoria finds that IMPACT’s calculations are riddled with frequent mistakes and inaccuracies. She also finds that her IMPACT score can be raised or lowered by actions unrelated to the quality of her teaching (like artificially inflating her students’ ratings).

She realizes that the algorithm’s output significantly affects her life. Furthermore, she sees that her students’ ratings depend heavily on their social status, as schools in low-income neighborhoods often have lower average ratings.
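One simple way to probe this kind of relative burden is to compare average scores across groups. The sketch below does that with invented data; the group labels, the scores, and the helper names `mean_score_by_group` and `largest_gap` are assumptions for illustration, not figures from IMPACT.

```python
from collections import defaultdict
from statistics import mean


def mean_score_by_group(records: list[tuple[str, float]]) -> dict[str, float]:
    """Average score for each group label in a list of (group, score) records."""
    scores: dict[str, list[float]] = defaultdict(list)
    for group, score in records:
        scores[group].append(score)
    return {group: mean(values) for group, values in scores.items()}


def largest_gap(records: list[tuple[str, float]]) -> float:
    """Difference between the highest and lowest group averages."""
    means = mean_score_by_group(records)
    return max(means.values()) - min(means.values())


# Invented scores for teachers, grouped by the income level of their school's neighborhood.
records = [
    ("low_income", 40.0), ("low_income", 50.0), ("low_income", 45.0),
    ("high_income", 70.0), ("high_income", 80.0), ("high_income", 75.0),
]
print(mean_score_by_group(records))  # {'low_income': 45.0, 'high_income': 75.0}
print(largest_gap(records))          # 30.0
```

A large gap does not prove the algorithm is biased on its own, but it is a signal worth investigating: here it would suggest that scores track where teachers work rather than how well they teach.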

For all these reasons, she can’t reasonably endorse such a system to evaluate her teaching skills, and her colleagues would likely agree.

What could make an algorithm of that kind more acceptable to its users?

How automated decision system designers can preserve our self-agency

Now Victoria is even more frustrated. Over the years, she has honed her teaching skills and now wants to teach at a university. But she has just found out that, based on IMPACT, a nearby university has refused her tenure.

More concerning, she doesn’t even know why, and she has no legal means of finding out: the private companies that manage the algorithm are not required to give affected individuals a specific reason.

For the same reason, she can’t edit or correct mistakes and miscalculations made on her account. She can’t even object to the use of IMPACT in such high-stakes decisions.

As a result, Victoria feels that she is losing her right to exercise her self-agency. With fellow teachers, she launches a civil disobedience movement that demands teachers’ self-governance. The movement argues that teachers should, first and foremost, have a say in choosing the automated tools that affect their work.

The movement makes several recommendations to the company behind IMPACT.

The designers of IMPACT should first increase the algorithm’s reliability to minimize error rates. They should take into account only factors that are under teachers’ control and relevant to their role. They should also thoroughly check the algorithm’s output for bias based on external factors (age, gender, origin, location). But that’s not all they should do.

As the primary users of the tool, Victoria and her fellow teachers argue that they have the right to control how it is used. They have the right to access the data the algorithm relies on to make a decision and to check it for errors. They have the right to control which data they provide and to edit this information when a mistake is made.

Finally, they have the right to object collectively, as a community of teachers, to the use of this automated tool, and individually to request human review and verification.

What can this story teach designers of automated solutions that substantially affect their users’ lives?

- Increase the transparency of AI-based decisions by providing counterfactual explanations: “you would have had a better chance of getting this position if X.”

- Give users access to the data at stake, along with features to edit it and correct mistakes.

- Give them a way to object to an automated decision, and build in human intervention and supervision.

- Check whether external variables influence the calculation, and mitigate the resulting bias as much as possible.
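To make the counterfactual recommendation concrete, here is a minimal sketch assuming the decision is a weighted sum of features compared against a threshold. IMPACT and most real systems are not necessarily this simple, and the feature names, weights, and threshold are all hypothetical. For each feature the user can act on, it computes the value that feature would need, all else unchanged, for the score to reach the threshold (a single-feature counterfactual for a linear model).

```python
def linear_score(features: dict[str, float], weights: dict[str, float]) -> float:
    """Hypothetical decision score: a weighted sum of the input features."""
    return sum(weights[name] * value for name, value in features.items())


def single_feature_counterfactuals(
    features: dict[str, float],
    weights: dict[str, float],
    threshold: float,
    actionable: list[str],
) -> dict[str, float]:
    """For each actionable feature, the value it would need (all other
    features unchanged) for the score to reach the threshold."""
    gap = threshold - linear_score(features, weights)
    if gap <= 0:
        return {}  # already at or above the threshold: no counterfactual needed
    needed = {}
    for name in actionable:
        weight = weights.get(name, 0.0)
        if weight != 0:  # a zero-weight feature cannot change the outcome
            needed[name] = features[name] + gap / weight
    return needed


# Invented example: neighborhood_income affects the score but is left out of
# `actionable` because it is not under the teacher's control.
weights = {"observation_score": 0.5, "student_rating": 0.25, "neighborhood_income": 0.25}
features = {"observation_score": 60.0, "student_rating": 40.0, "neighborhood_income": 20.0}
counterfactuals = single_feature_counterfactuals(
    features, weights, threshold=50.0, actionable=["observation_score", "student_rating"]
)
for name, value in counterfactuals.items():
    print(f"You would have reached the threshold if {name} had been {value:g} instead of {features[name]:g}.")
```

Restricting explanations to actionable features echoes the Relevance factor: telling someone they would have passed had they worked in a wealthier neighborhood is not an explanation they can act on. For non-linear models, counterfactuals are usually found by searching for the smallest change across several features rather than with this closed-form formula.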

Individuals subjected to automated decision systems want to be in the loop. We need to make sure they feel part of the decision, even when they don’t have the final say.