It’s a well-known problem for aircraft pilots: when the weather is foggy or cloudy, landing is hard.

Automated landing systems emerged to help them operate planes in low-visibility conditions.

Yet despite autoland’s proven track record, pilots rarely turn it on to get a perfect landing. They feel they don’t have a grip on the automated system.

As average company workers, we can also feel like modern pilots. New automation solutions are being implemented every day, and we feel like we’re losing control.

Sure, these new tools can feel like a necessary step towards better reliability and safety. But if you’re like me, your inner voice might resist these self-fulfilling visions.

You might even wonder: why should engineering calculations have more say in company decisions than hands-on workers in the field?

And that feeling raises a valid point in the debate. We have every right to keep and maintain a sense of control over newly adopted technology. Here’s why.

AI vs human expertise

Automation innovators are often vocal about the benefits their solutions provide. And that’s understandable. You just have to take a look at the big promoters of self-driving cars.

One of them is Paul Newman, cofounder of a self-driving startup, who holds an optimistic view on this topic. Because autonomous cars are less prone to human error, fatigue, and distraction (which cause 80% of annual accidents), Newman believes they can bring a renewed sense of safety to the road.

And although the dream of autonomous vehicles is not yet a reality, he thinks it’s just around the corner. The many sensors, lasers, and radars will soon collect enough data to drive better than humans.

What about the professional truck drivers who prove their skills on the road every day? They’ll have to gradually give up their seats to more reliable, autonomous trucks.

These views are consistent with the opinions of automation theorists and leaders. They usually think that AI will make the world safer by taking over human decision-making. As machine-learning models are fed billions of patterns, they’ll be able to hard-code human values and make the most beneficial decisions for everyone.

That’s a hopeful and reassuring story, but it misses an essential requirement for technology adoption. Its proponents assume that the drive for new technology is only a matter of cost-benefit analysis, neglecting the importance of societal debate.

Like autonomous trucking companies, they weigh only opportunity costs and efficiency gains when considering whether to implement automation. They don’t consider the views of professional truck drivers, the resistance from regulators, or the potential impact on public service.

But why would automation designers have the last word over human specialists and the decision-makers involved? There’s no good reason to defend this.

Automation solutions and professional responsibility

Healthcare is one of those sectors where innovations are coming out every day. And rightly so, as data-driven medicine promises life-changing treatments for diseases.

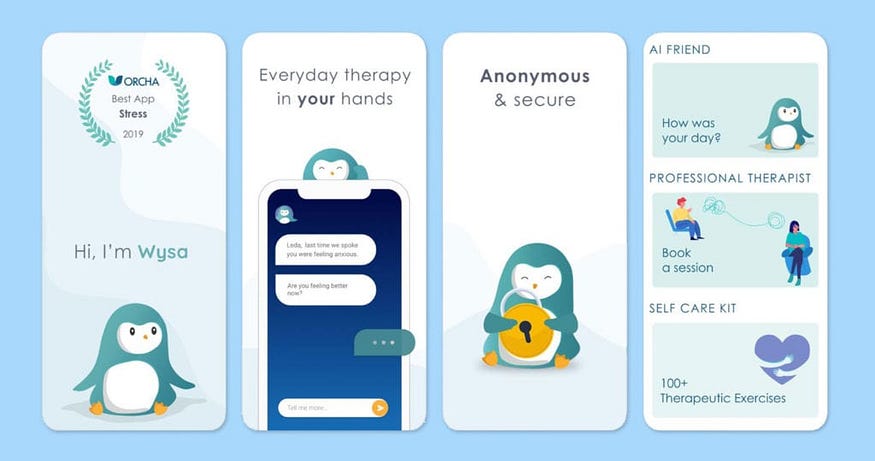

Wellness and mental health apps are two major innovation paths that seek to provide affordable, around-the-clock psychological counseling. Based on user data and generative language models, they can help patients set up self-care routines, track their feelings, and get advice on their behavior. They help when it’s difficult or intimidating to reach out to a specialist.

Those AI-augmented solutions are beneficial healthcare alternatives, but who knows which prior assumptions they are carrying? That is especially concerning when you know that these apps are dealing with vulnerable users.

That’s the case for many automation solutions put on the market. They all carry politically critical human biases and assumptions.

For example, those mental health apps might convey mostly male-focused psychological guidance or heavily depend on the data set they are trained on.

If professional therapists are not included in the process, these systems call their responsibility into question. Someone has to be responsible for providing reliable mental health treatment, and who else could that be but psychologists?

No wonder then that in the health sector, self-governance is key when it comes to implementing new technologies. For example, in the American healthcare industry, state licensing boards of physicians determine which types of devices are permissible.

In the same way, nurses can choose how to change the organization of their work. They can consider the consequences of introducing social robots to care for the elderly. They can argue that caring for patients is a purely human responsibility and that robots could take away their control over their practice.

In the educational sector, however, things are different. Teachers in the US still don’t have the power to defend their human expertise. They can’t use their bargaining power to assess the impact of AI and technology adoption in the classroom. So learning software and devices are being introduced by the administrative authorities without further consideration.

In that case, one way to empower teachers would be to create boards that assess and regulate every educational robot put on the market. Automation should be a democratic process where every profession has a say in how it wants to structure its activity.

You could see all these initiatives as slowing down the pace of technological progress. You wouldn’t be wrong.

But it’s also a way to defend and reclaim human control. Because only human experts can be accountable, prevent biases, and set rules.

And that’s why we need to put human-machine interaction into perspective.

The new laws of robotics

You might know Isaac Asimov’s “Three Laws of Robotics”:

“1. A robot may not injure a human being or, through inaction, allow a human being to come to harm.

2. A robot must obey the orders given it by human beings except where such orders would conflict with the First Law.

3. A robot must protect its own existence as long as such protection does not conflict with the First or Second Law.”

As visionary as these laws were, they can no longer address the ethical conundrums automation makers and legislators are facing today. Rapid advances in AI now let algorithms generate human language, better predict our preferences, and shape our opinions.

With the rapid pace of technology, some AI experts even fear the emergence of a super-intelligent entity. Although that’s still a distant threat given ML models’ narrow intelligence, we should consider how to interact safely with such future entities.

Building on game theory, the study of assistance games seeks to understand how an intelligent robot would relate to its human counterpart. Given a super-intelligent robot without predefined goals, which values would you hard-code to ensure it doesn’t get out of hand?

One way to do it is to keep the robot uncertain about its owner’s preferences. Robots should never completely know our preferences. They would have to infer them by watching our behavior.

Why is that? So the robot wouldn’t mind being switched off, since that would be a learning signal. If the owner switches it off, the robot gets additional information about the owner’s preferences: namely, that the owner didn’t like what it had just done.
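To make the switch-off reasoning concrete, here is a minimal sketch of a simplified setup often called the off-switch game. It rests on assumptions that are not in the text above: the robot’s uncertainty is represented as a list of sampled values for the utility `U` its owner would get from an action, and the owner is assumed to know their own preference and to allow the action only when `U > 0`. The function name `off_switch_values` is hypothetical.

```python
def off_switch_values(utility_samples):
    """Expected payoff of the robot's three options under its own uncertainty.

    utility_samples: samples of U, the owner's (unknown to the robot) utility
    for the action the robot is considering. Illustrative assumption only.
    """
    n = len(utility_samples)
    # Option 1: act without asking. The owner receives U, whatever it is.
    act = sum(utility_samples) / n
    # Option 2: switch itself off. Nothing happens, so the payoff is 0.
    switch_off = 0.0
    # Option 3: defer to the owner. Assumed rational owner: the action goes
    # ahead only if U > 0, otherwise the robot is switched off (payoff 0).
    defer = sum(max(u, 0.0) for u in utility_samples) / n
    return {"act": act, "switch_off": switch_off, "defer": defer}


if __name__ == "__main__":
    # Uncertain robot: the action might be bad (-1) or good (+2).
    print(off_switch_values([-1.0, 2.0]))
    # Certain robot: it is sure the action is good, so deferring adds nothing.
    print(off_switch_values([1.0, 1.0]))
```

In this toy model, deferring is never worse than acting alone or shutting down, and it is strictly better whenever the robot is unsure whether the action is good or bad. Once the robot is certain, the advantage disappears, which is why the uncertainty matters. The result depends on the assumption that the owner judges correctly.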

What makes this argument interesting is that such a robot would never be completely autonomous. It would always rely on human input. Even once it had an almost complete picture of the owner’s preferences, it would still need permission in case those preferences had changed.

On the other hand, we as robot owners would always keep control of the system while enjoying its unlimited services. Moreover, as the robot’s understanding grows, it will realize how much we value personal autonomy and self-reliance. It will give us more room to be on our own and to decline its services.

That reasoning also applies to the use of automation solutions. Automation should never be fully autonomous. It should always align with our preferences, whatever they are. And one of them is about autonomy, agency, and control.

We have a fundamental preference for agency over passivity. We value choosing our own goals, even though it’s not always the perfect choice in the utilitarian sense.

That matters both for professionals defending their profession and for people who value their personal preferences. And this is a right that robots will have to live with.